This post is the first installment of a series that covers different aspects of Big Data pipelines. In this post I’ll present what a Big Data pipeline looks like and discuss a few major challenges in building one.

What is a Data Pipeline?

A data pipeline is a system composed of a set of jobs that ingest data from a variety of sources. It then moves the collected data into other storage for future use, for example a data warehouse that serves BI systems or a Hadoop cluster used by data scientists.

Traditional data pipelines are characterized by batch processing of structured or semi-structured data.
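
To make the definition concrete, here is a minimal sketch of a traditional batch job in Python. It ingests structured rows from a CSV source, applies a small transformation, and loads the result into a SQLite table that stands in for a data warehouse. The table name `daily_sales`, the column names, and the sample data are assumptions made for illustration only.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real job would read from files, APIs, or databases.
SOURCE_CSV = """order_id,amount,currency
1,10.50,usd
2,4.25,usd
3,7.00,eur
"""


def extract(text):
    """Ingest: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Clean: convert amounts to floats and normalize currency codes."""
    return [
        (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
    ]


def load(conn, records):
    """Store: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_batch(conn, text):
    """Run one batch: extract, transform, then load."""
    load(conn, transform(extract(text)))


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
run_batch(conn, SOURCE_CSV)
total_usd = conn.execute(
    "SELECT SUM(amount) FROM daily_sales WHERE currency = 'USD'"
).fetchone()[0]
print(total_usd)
```

In a nightly setup, a scheduler would call something like `run_batch` once per day against that day’s source files.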

Got It, But What Is a Big Data Pipeline?

Over the last few decades, the amount of data generated and collected has grown significantly, and that growth is accelerating. A Big Data pipeline uses tools that can analyze data efficiently and address more requirements than the traditional data pipeline process. Examples include real-time data streaming, unstructured data, high-velocity transactions, higher data volumes, real-time dashboards, and IoT devices.

Big Data Pipeline Challenges

Technological Arms Race

Not too long ago, data pipelines were based on on-prem batch processing. Most organizations used their spare computing and database resources to run nightly batches of ETL jobs during office off-hours. That is why, for example, you used to see your bank account balance updated only the day after you made an ATM cash withdrawal.

Historically, most companies used one of a few standard tools for the data pipeline process, such as Informatica or SSIS; others relied on hundreds of SQL stored procedures developed in-house. Back then, there were only a few tools that could do the job.

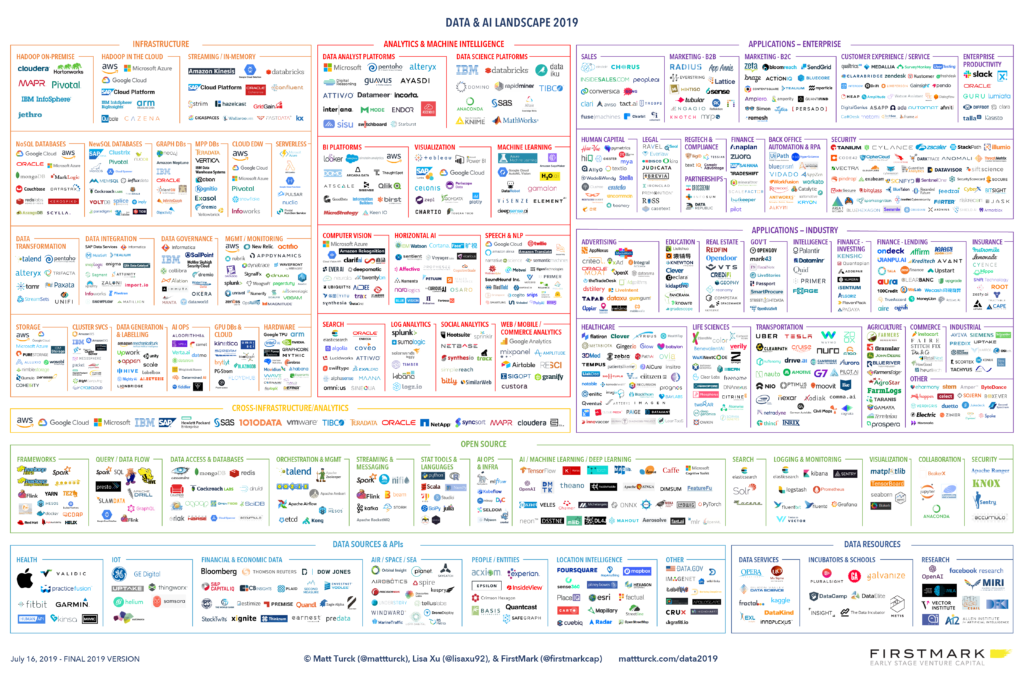

Nowadays, it is not that simple. Dozens of tools have been developed over the past few years to overcome the limits of traditional data processing, which cannot handle the velocity, volume, and variety of today’s data.

Today, companies that need to build a resilient and robust data pipeline have to hire engineers with experience across a wide range of technologies and programming languages.

For a sense of how many tools exist, see the Data & AI Landscape (2019) by Matt Turck.

Growing Pains

The amount of data created by every one of us each year is growing faster than ever before, and a similar trend can be found in most organizations worldwide.

Data volumes in companies can vary significantly from one month to the next. This is caused by new or changing business requirements, an increase/decrease in the number of customers, and more. Many business sectors experience seasonal data peaks due to holidays, sports events, and so on.

Thus, ongoing configuration and management of the underlying compute and storage resources is mandatory. Think about companies like Netflix, Spotify, eBay, and Amazon, which need to meet the demand of millions of customers globally. They scale horizontally and vertically, up and down, on an hourly basis.
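
As a rough illustration of scaling out and in with demand, the sketch below computes how many workers a given hourly load would need. The function name `workers_needed`, the per-worker capacity, and the bounds are hypothetical; real autoscalers also weigh cost, warm-up time, and smoothing of short spikes.

```python
import math


def workers_needed(events_per_hour, capacity_per_worker, min_workers=1, max_workers=50):
    """Return a worker count for the given hourly load, clamped to bounds."""
    if capacity_per_worker <= 0:
        raise ValueError("capacity_per_worker must be positive")
    required = math.ceil(events_per_hour / capacity_per_worker)
    return max(min_workers, min(max_workers, required))


# Hypothetical hourly loads: a quiet night, a normal day, and a holiday peak.
hourly_load = [20_000, 450_000, 3_200_000]
plan = [workers_needed(load, capacity_per_worker=100_000) for load in hourly_load]
print(plan)
```

The lower bound keeps the pipeline alive during quiet hours, and the upper bound caps spending during peaks.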

The Grunt-Work

Ongoing configuration and management of data pipeline resources is not an easy job. A continuous process of updates, patches, backups, and upgrades of servers, databases, and applications takes time and human resources.

Some of these upgrades are needed because the software versions in use are deprecated and no longer supported; others are needed to gain features that exist only in versions later than the one in use.

Such processes need careful planning and execution. It is essential to know how a new version will affect every component in the system, including changed dependencies and removed features.

Beware The Monolith Hell

Organizations can build data pipelines in many ways, using many combinations of tools, and the tools can vary widely even when the end requirements are the same. Whatever the choice, the pipeline should be resilient and robust, with a design that is as decoupled as possible. That effort requires more development time and knowledgeable developers, which is why many companies end up with one or more servers that host all of the company’s transaction databases, data warehouses, data lakes, web servers, APIs, and so on.

While building data pipelines this way may seem fast and easy at first, it could result in storage or compute failure. Such failures could leave the organization’s data unavailable for a few hours or, worse, cause data loss and denial-of-service errors that cost the organization its customers’ trust and money.

Restoring system processes takes a long time, if it is possible at all, and adapting the system to new or changing business requirements will take 5 to 10 times longer.
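
One common way to keep pipeline stages decoupled is to have them communicate through a queue instead of calling each other directly. The sketch below uses Python’s standard `queue` and `threading` modules as an in-process stand-in for a message broker; the stage names, the `amount_cents` field, and the `SENTINEL` marker are assumptions for illustration.

```python
import queue
import threading

SENTINEL = object()  # hypothetical marker that tells a consumer to stop


def ingest(out_q, events):
    """Producer stage: publishes raw events, unaware of who consumes them."""
    for event in events:
        out_q.put(event)
    out_q.put(SENTINEL)


def enrich(in_q, out_q):
    """Middle stage: reads from one queue and writes to another."""
    while True:
        event = in_q.get()
        if event is SENTINEL:
            out_q.put(SENTINEL)  # pass the stop signal downstream
            break
        out_q.put({**event, "amount_cents": round(event["amount"] * 100)})


def store(in_q, sink):
    """Final stage: appends results to a sink (a list standing in for storage)."""
    while True:
        event = in_q.get()
        if event is SENTINEL:
            break
        sink.append(event)


raw_q, enriched_q, sink = queue.Queue(), queue.Queue(), []
events = [{"id": 1, "amount": 2.5}, {"id": 2, "amount": 0.75}]

stages = [
    threading.Thread(target=ingest, args=(raw_q, events)),
    threading.Thread(target=enrich, args=(raw_q, enriched_q)),
    threading.Thread(target=store, args=(enriched_q, sink)),
]
for t in stages:
    t.start()
for t in stages:
    t.join()

print(sink)
```

Each stage knows only its input and output queues, so one stage can be changed or replaced without touching the others. In a real deployment the queues would typically be an external broker, so that stages could also run and fail independently on separate machines.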

To Be Continued

In the next post, titled “Design Principles for Big Data Pipelines,” I will present generic architecture principles for Big Data pipelines, based on best practices. Stay tuned!